Using AWS CloudWatch Insights To Fine Tune Your Lambda Functions

It’s not uncommon for folks that are newer to Serverless and AWS Lambda to provision their functions for what they think they will need. In fact, I would venture to guess that most of us do exactly that.

We think about the service, what it will do, what things will be reliant on it and then we sit down and code. Once we finish putting fingers to keyboard, we set out to provision our shiny new serverless service.

When it comes to provisioning our new service, we make our best guess at a couple of things. We estimate how much memory to give our function. We have a general sense of the amount of time our function needs to run and exit. Serverless allows us to move quickly, and we take full advantage of that from the idea all the way through deployment.

But what if our best guesses and estimates were wrong? If we under-provisioned our memory or set our timeout too low, we should see plenty of errors. But what if we over-provisioned either of these?

Amazon Web Services isn’t going to raise any alarm bells for you about that; it rests on our shoulders. Luckily, AWS has given us some tools and insights that allow us to check for this scenario on our own.

In this post, we are going to take a look at how we can use AWS CloudWatch Insights to gain a better understanding of how much memory and execution time our Lambda functions are actually using. From there we can fine tune our functions to balance the cost side of things while not degrading performance.

AWS CloudWatch Insights

Back in November AWS released CloudWatch Insights. Pretty much all AWS services create logs of some kind and within those logs is usually a lot of really valuable information regarding your infrastructure.

Before this release, getting at that data and visualizing it was a few hops away. Sometimes you could do rudimentary queries on log groups. But oftentimes you had to dump the logs somewhere and then ingest them into something that could query them.

Amazon CloudWatch Insights removes a lot of that burden. You can use prebaked queries to get at interesting data points, such as how much memory your AWS Lambda function is actually using. You can even write your own custom queries against the logs of a variety of AWS services, which is useful for generating reports and visualizations that are specific to your infrastructure.

We are going to be using CloudWatch Insights to explore how we can optimize the memory allocated to our functions to save a bit of money. Note that the pricing of running queries in CloudWatch Insights is $0.005 per GB of scanned data so be careful with how much data you are querying.
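To get a feel for what that pricing means in practice, here is a minimal sketch of the arithmetic. The rate is the one quoted above and may differ by region or change over time; the function name and the 50 GB figure are made up for illustration.

```python
# Rate quoted in this post; check current CloudWatch pricing for your region.
PRICE_PER_GB_SCANNED = 0.005


def insights_query_cost(gb_scanned: float, runs: int = 1) -> float:
    """Estimated cost in USD of running a query `runs` times over `gb_scanned` GB of logs."""
    return gb_scanned * PRICE_PER_GB_SCANNED * runs


# Hypothetical example: a query over 50 GB of logs, run once a day for 30 days.
print(f"${insights_query_cost(50, runs=30):.2f}")
```

Narrowing the time range or the set of log groups reduces the data scanned, and therefore the cost.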

An example AWS Lambda function

For this blog post, let’s give ourselves a realish-world scenario where we may have overprovisioned the memory of an AWS Lambda function.

Here is a GitHub repository for a Lambda function I wrote that automates the MailChimp campaign creation for my Learn By Doing Newsletter. The function queries a Google Sheet to get the articles for a week and then creates a MailChimp campaign using an email template.

This function only runs once a week, so it’s definitely not going to break the bank. But for our exercise let’s pretend that this function was being invoked 10,000 times a week. Imagine a world where this function was multi-purpose (i.e. it could schedule MailChimp campaigns for hundreds or even thousands of newsletters a week).

With me so far? Excellent.

The implementation details of the function are less important here, but if you want to dig in a bit more, here is where things get kicked off.

What we want to take a look at is how much memory is provisioned for this around-the-clock function. We can see that by checking out the serverless.yml file.

service: sheets-automation

provider:
  name: aws
  runtime: nodejs8.10
  region: us-west-2

functions:
  generate-next-newsletter:
    handler: handler.generateNewsletter
    events:
      - schedule: cron(0 19 ? 1-10 THU *)
    environment:
      googleSheetId:
      mailChimpKey:
      mailchimpListId:

Interesting, we don’t see any mention of memory in this YAML file, so what does that mean? That means that the default memory value from the Serverless Framework has been used, meaning we have provisioned 1024 MB of memory for this function.

Do we really need all of that memory for a function that reads a Google Sheet and calls a MailChimp API? Maybe, but we can actually get our answer empirically by using AWS CloudWatch Insights.

Determining the amount of overprovisioned memory

CloudWatch Insights comes with a lot of sample queries out of the box. You can use these to see recent CloudTrail events, query your VPC flow logs, see how many requests your Route53 zones are receiving and many more.

The sample query that we are interested in is the one that references over-provisioned memory. We can view this query by logging into the AWS Console and then going to CloudWatch. Once we are in the Amazon CloudWatch service, we can select the Insights link underneath Logs.

Once we are on the CloudWatch Insights page, we can select the Sample Queries dropdown and then open the Lambda selection. There we see the “Determine the amount of overprovisioned memory” sample query. Go ahead and select that. We should see the query populate into the editor, and it should look like this.

filter @type = "REPORT"
| stats max(@memorySize / 1024 / 1024) as provisonedMemoryMB,
    min(@maxMemoryUsed / 1024 / 1024) as smallestMemoryRequestMB,
    avg(@maxMemoryUsed / 1024 / 1024) as avgMemoryUsedMB,
    max(@maxMemoryUsed / 1024 / 1024) as maxMemoryUsedMB,
    provisonedMemoryMB - maxMemoryUsedMB as overProvisionedMB

The query is essentially getting the provisioned memory, the minimum amount used, the average amount used, and the maximum amount used, and then calculating how over-provisioned our memory is. It calculates this over the time period we select, which is shown in the top right and defaults to one hour.
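To make the aggregation concrete, here is a rough Python sketch that computes the same five numbers from raw Lambda REPORT log lines. It is not how CloudWatch Insights works internally. The sample lines and request IDs are invented for illustration, and the snake_case result names are my own. It also reads the MB values straight from the log text, so its numbers will not match the query's byte-based arithmetic exactly.

```python
import re
from statistics import mean

# Hypothetical log lines; real ones live in the function's CloudWatch log group.
SAMPLE_LOG_LINES = [
    "START RequestId: 1a2b Version: $LATEST",
    "REPORT RequestId: 1a2b\tDuration: 7012.45 ms\tBilled Duration: 7100 ms\tMemory Size: 1024 MB\tMax Memory Used: 99 MB",
    "REPORT RequestId: 3c4d\tDuration: 6988.10 ms\tBilled Duration: 7000 ms\tMemory Size: 1024 MB\tMax Memory Used: 100 MB",
    "END RequestId: 3c4d",
]

REPORT_PATTERN = re.compile(r"Memory Size: (\d+) MB\s+Max Memory Used: (\d+) MB")


def summarize_memory(lines):
    """Aggregate memory figures from REPORT lines, mirroring the sample query's stats."""
    sizes, used = [], []
    for line in lines:
        if not line.startswith("REPORT"):  # same idea as: filter @type = "REPORT"
            continue
        match = REPORT_PATTERN.search(line)
        if match:
            sizes.append(int(match.group(1)))
            used.append(int(match.group(2)))
    if not used:
        raise ValueError("no REPORT lines found")
    provisioned = max(sizes)
    return {
        "provisioned_mb": provisioned,
        "smallest_used_mb": min(used),
        "avg_used_mb": mean(used),
        "max_used_mb": max(used),
        "over_provisioned_mb": provisioned - max(used),
    }


print(summarize_memory(SAMPLE_LOG_LINES))
```

The point of the sketch is only that each invocation contributes one REPORT line, and the query reduces those lines to a handful of min, avg, and max values.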

In the query editor, we also see a dropdown menu that allows us to select the AWS resource we want to run this query against. Let’s go ahead and select our sample AWS Lambda function and run this query over the past four weeks.
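If you would rather run the query from a script than from the console, the sketch below shows one way to do it with the CloudWatch Logs `start_query` and `get_query_results` calls as exposed by boto3. The helper name, the polling defaults, and the log group placeholder are assumptions of mine, not something from the repository. The function takes the client as a parameter so that boto3 is only needed where you actually call it.

```python
import time

OVERPROVISIONED_QUERY = """
filter @type = "REPORT"
| stats max(@memorySize / 1024 / 1024) as provisonedMemoryMB,
    min(@maxMemoryUsed / 1024 / 1024) as smallestMemoryRequestMB,
    avg(@maxMemoryUsed / 1024 / 1024) as avgMemoryUsedMB,
    max(@maxMemoryUsed / 1024 / 1024) as maxMemoryUsedMB,
    provisonedMemoryMB - maxMemoryUsedMB as overProvisionedMB
"""


def run_insights_query(logs_client, log_group, query, days=28, poll_seconds=1.0, max_polls=60):
    """Start an Insights query over the last `days` days and poll until it finishes."""
    end_time = int(time.time())  # the API expects epoch seconds
    start_time = end_time - days * 24 * 60 * 60
    started = logs_client.start_query(
        logGroupName=log_group,
        startTime=start_time,
        endTime=end_time,
        queryString=query,
    )
    for _ in range(max_polls):
        response = logs_client.get_query_results(queryId=started["queryId"])
        status = response["status"]
        if status == "Complete":
            # Each row is a list of {"field": ..., "value": ...} cells; flatten to dicts.
            return [{cell["field"]: cell["value"] for cell in row} for row in response["results"]]
        if status in ("Failed", "Cancelled", "Timeout"):
            raise RuntimeError(f"query ended with status {status}")
        time.sleep(poll_seconds)
    raise TimeoutError("query did not complete in time")


# Usage (requires boto3 and AWS credentials; the log group name is a placeholder):
#   import boto3
#   client = boto3.client("logs", region_name="us-west-2")
#   rows = run_insights_query(client, "/aws/lambda/<your-function-name>", OVERPROVISIONED_QUERY)
```

Values come back as strings, so convert them with `float()` before doing any arithmetic on them.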

When we look at the past four weeks, we see that the function ran four times. Again let’s pretend that this function was being invoked 10,000 times a week. So imagine that this function was invoked 40,000 times in the past 4 weeks. Looking at the results we see the following.

provisonedMemoryMB is 976.5625

smallestMemoryRequestMB is 94.4138

avgMemoryUsedMB is 95.1767

maxMemoryUsedMB is 95.3674

overProvisionedMB is 881.1951

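Our provisioned memory is ~976 MB, but our average memory use is only about 95 MB, meaning our function is over-provisioned by ~881 MB. (The 1024 MB setting shows up as 976.5625 because the query divides a byte count of 1,024,000,000 by 1024 twice.) Pretty cool, right? Yes, but what are the implications of only using roughly 10% of the memory we provisioned?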

The simplest answer is that we are wasting resources and thus we are wasting money as well. Let’s look at the pricing breakdown.

With our Lambda function provisioned at 1024 MB of memory, it takes ~7,000 milliseconds to execute per invocation. With 10,000 invocations a week our cost breakdown looks like this.

10,000 * 4 = 40,000 invocations/month

40,000 * 7 seconds = 280,000 seconds/month

280,000 * 1024/1024 = 280,000 GB-seconds

At a rate of $0.00001667 per GB-second, we get a total cost of

280,000 * $0.00001667 = ~$4.66 per month

Per-request charges add a little on top of this, but they are minuscule in this scenario.

Five bucks a month for a function that is running 40,000 times a month doesn’t seem too bad, to be honest. It’s actually not likely worth your time to optimize this, but for argument’s sake let’s say we changed our memory to 128 MB, how does that impact our cost?

10,000 * 4 = 40,000 invocations/month

40,000 * 7 seconds = 280,000 seconds/month

280,000 * 128/1024 = 35,000 GB-seconds

At a rate of $0.00001667 per GB-second, we get a total cost of

35,000 * $0.00001667 = ~$0.58 per month
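The two breakdowns above follow the same formula, so they are easy to capture in a small helper. This is a sketch under the post's assumptions: the GB-second rate quoted above, a flat 7 seconds per invocation, and no request charges or free tier. The function name is mine.

```python
PRICE_PER_GB_SECOND = 0.00001667  # rate used in this post


def monthly_compute_cost(invocations, seconds_per_invocation, memory_mb):
    """Compute-only Lambda cost in USD: GB-seconds consumed times the GB-second rate."""
    gb_seconds = invocations * seconds_per_invocation * memory_mb / 1024
    return gb_seconds * PRICE_PER_GB_SECOND


cost_1024 = monthly_compute_cost(40_000, 7, 1024)
cost_128 = monthly_compute_cost(40_000, 7, 128)
savings = 1 - cost_128 / cost_1024

print(f"1024 MB: ${cost_1024:.2f}")
print(f" 128 MB: ${cost_128:.2f}")
print(f"savings: {savings:.1%}")
```

Because duration is held constant here, the savings are simply the ratio of the two memory sizes: 128/1024 leaves one eighth of the cost, an 87.5% reduction.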

Whoa, our function is now nearly 88% cheaper than it was when we were provisioned at 1024 MB of memory. OK, big deal, we save a little over four bucks a month. That step alone likely isn’t going to make a noticeable dent in your AWS bill.

But remember the 1024 MB was the default memory provisioned to our AWS Lambda function when we used Serverless Framework to provision it. What if your entire team of 4-5 developers was provisioning Lambda functions as you are moving to a serverless architecture? Are they doing a lot of analysis on how much memory they actually need? Maybe, but it’s more likely that they are not.

So let’s extrapolate even further and say that you have 300 Lambda functions that have a similar performance profile to our example. Our cost for that serverless architecture would be $4.66 x 300, or $1,398 per month, when our functions are over-provisioned. If we tune our memory, the cost is significantly lower: $0.58 x 300, or $174 per month.

The differences are drastic for such a small change and can really save a few pennies on your AWS billing at scale.

The Fine Print

There is some fine print to talk about as it relates to reducing the amount of memory we have over-provisioned. For our example workload, we cut the memory from 1024 to 128 MB and our performance profile didn’t change. We still completed our function in ~7 seconds.

But, this isn’t always the case. There are workloads where changing our provisioned memory by 88% is going to change how long our function takes. If decreasing our memory causes our function to take 6 times as long to complete, then we are accruing more seconds per month which can cut into AWS cost savings.

On the flip side, increasing your memory allocation can result in faster invocations. For our example function, we can change the memory from 1024 MB to 2048 MB and see our execution time drop to 3.5 seconds instead of 7. That means we can cut the execution time of our function in half without paying a single penny more than what we pay at 1024 MB, because the GB-seconds consumed stay the same.

The thing to keep in mind here is that you are going to need to experiment with fine-tuning your memory allocation. Having 900+ MB of overprovisioned memory is a clear sign that you can scale back. But scaling all the way back to 128 MB has the potential to change your performance profile depending on your workload.
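Since cost is driven by memory multiplied by duration, a quick way to reason about these trade-offs is to compare GB-seconds per invocation. The sketch below uses the scenarios from this section. The helper names are mine, and the 42-second figure is just 7 seconds multiplied by the hypothetical 6x slowdown mentioned above.

```python
def gb_seconds(memory_mb, duration_s):
    """GB-seconds billed for one invocation at the given memory size and duration."""
    return memory_mb / 1024 * duration_s


def break_even_slowdown(old_memory_mb, new_memory_mb):
    """Slowdown factor at which the smaller memory setting costs the same as the old one."""
    return old_memory_mb / new_memory_mb


baseline = gb_seconds(1024, 7)       # current configuration
doubled = gb_seconds(2048, 3.5)      # twice the memory, half the duration
slow_small = gb_seconds(128, 7 * 6)  # an eighth of the memory, 6x the duration

print(baseline, doubled, slow_small)
print(break_even_slowdown(1024, 128))
```

In other words, dropping from 1024 MB to 128 MB only saves money while the function slows down by less than 8x. At a 6x slowdown it is still cheaper, but the savings shrink from 87.5% to 25%, and users wait six times as long.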

Conclusion

Serverless is a very powerful architecture that can yield loads of benefits, from cost savings to freeing developers to focus on business drivers rather than infrastructure. But the cost benefit can quickly be wiped out if functions are not configured and tuned correctly.

AWS CloudWatch Insights is a great place to start when it comes to fine tuning the memory allocated to your function. As we saw with our example function, we could save 88% a month just by tuning our function to what it actually uses. Memory is one lever that we can control that yields cloud cost savings. But, it can also be used to gain performance advantages at the same cost you are paying today.

If you have any questions about CloudWatch Insights, my weekly Learn by Doing newsletter, or my Learn AWS By Using It course, feel free to ping me via Twitter: @kylegalbraith.

If you have questions about CloudForecast and using our product to help you monitor and optimize your AWS cost, feel free to ping Tony: [email protected]


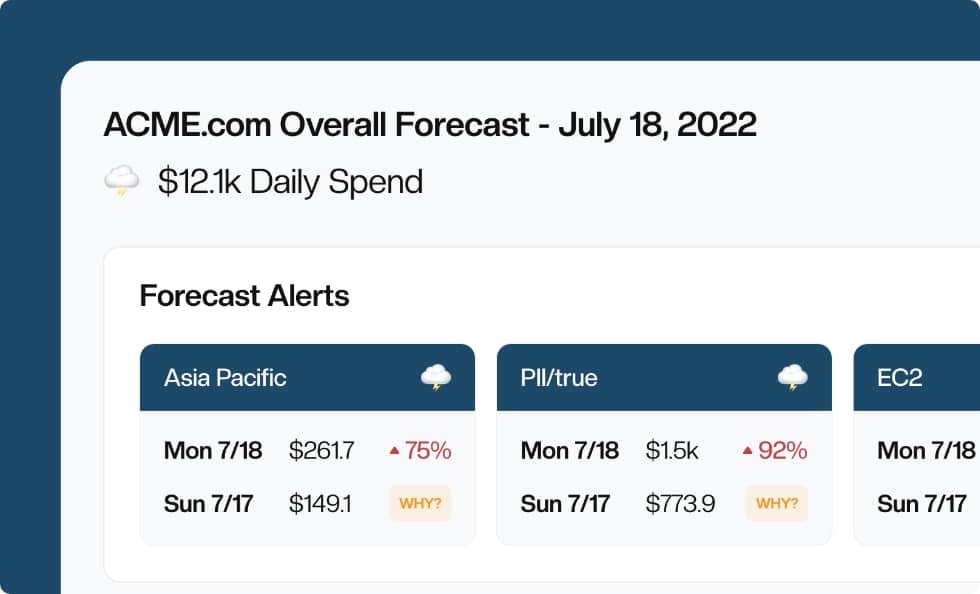

Manage, track, and report your AWS spending in seconds — not hours

CloudForecast’s focused daily AWS cost monitoring reports to help busy engineering teams understand their AWS costs, rapidly respond to any overspends, and promote opportunities to save costs.

Monitor & Manage AWS Cost in Seconds — Not Hours

CloudForecast makes the tedious work of AWS cost monitoring less tedious.

AWS cost management is easy with CloudForecast

We would love to learn more about the problems you are facing around AWS cost. Connect with us directly and we’ll schedule a time to chat!