A Comprehensive Guide to AWS Bedrock Pricing

When building cutting-edge generative AI applications, AWS Bedrock stands out as one of the most powerful services out there. It's fully managed, which means you can focus on developing your AI applications while AWS handles all the scaling behind the scenes.

But when it comes to pricing, things can get a little complicated. Knowing how Amazon Bedrock's pricing works is extremely important if you want to manage costs and make the most of your resources. In this guide, we'll walk you through everything you need to know about Bedrock pricing so your AI projects run smoothly and stay within budget!

What is AWS Bedrock?

First things first: AWS Bedrock is a fully managed service designed to simplify the development and scaling of generative AI applications. Bedrock provides access to a range of high-performing foundation models (FMs) from top AI companies, all conveniently through a single API.

What are some other cool things about Bedrock? It's serverless, so you won't have to worry about managing servers or scaling your infrastructure manually. Bedrock also plays super nicely with other AWS favorites, such as Amazon S3 for data storage and Amazon CloudWatch for monitoring. So if you're already on AWS, Bedrock can slot right into any AI project with zero fuss.

AWS Bedrock Pricing Models

Now, onto pricing. Pricing in Bedrock isn’t too complicated per se–but there are a lot of nuances depending on how you use the service. Let’s dive into the main pricing options and a pricing example for each one.

All of the following examples assume that we use the Claude Instant model in the US East (N. Virginia) region, unless otherwise noted.

On-Demand Pricing

On-demand is super straightforward–it’s all about how many input and output tokens your models chew through. This option is perfect if your workloads tend to fluctuate, since you pay only for what you use. No strings attached.

Say you’re building an AI chatbot that answers occasional customer queries. With on-demand pricing, you don’t have to commit to a specific amount of usage–just pay as you go.

Realistically, how much might this AI chatbot cost? Let’s walk through a pricing example with the following assumptions (pricing rates in parentheses):

- Input: 10 tokens per query ($0.0008 per 1,000 input tokens)

- Output: 100 tokens per response ($0.0024 per 1,000 output tokens)

- Daily usage: An average of 1,000 queries per day (this may fluctuate greatly), which equates to around 10,000 input tokens and 100,000 output tokens per day.

Given these parameters, here’s a cost breakdown:

- Input cost: 10,000 tokens / 1,000 * $0.0008 = $0.008/day

- Output cost: 100,000 tokens / 1,000 * $0.0024 = $0.24/day

- Total daily cost: $0.008 + $0.24 = $0.248/day

- Total monthly cost: $0.248/day * 30 days = $7.44/month
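
The on-demand arithmetic is easy to script, which helps when you want to plug in your own traffic numbers. Here is a minimal Python sketch. It assumes the Claude Instant rates quoted above and a flat 30-day month. The function name is our own, and you should check current rates against the Bedrock pricing page before relying on the output.

```python
# Sketch of the on-demand cost arithmetic. Rates are the Claude Instant
# figures used in this article (USD per 1,000 tokens); they may have changed.
INPUT_RATE_PER_1K = 0.0008
OUTPUT_RATE_PER_1K = 0.0024


def on_demand_daily_cost(queries_per_day: int,
                         input_tokens_per_query: int,
                         output_tokens_per_query: int) -> float:
    """Estimated on-demand cost in USD for one day of traffic."""
    input_tokens = queries_per_day * input_tokens_per_query
    output_tokens = queries_per_day * output_tokens_per_query
    input_cost = input_tokens / 1_000 * INPUT_RATE_PER_1K
    output_cost = output_tokens / 1_000 * OUTPUT_RATE_PER_1K
    return input_cost + output_cost


daily = on_demand_daily_cost(1_000, 10, 100)
monthly = daily * 30  # assumes a flat 30-day month
print(f"daily: ${daily:.3f}, monthly: ${monthly:.2f}")
```

Because output tokens cost three times as much as input tokens here, the output side dominates the bill. Trimming response length usually saves more than trimming prompts.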

Overall, on-demand pricing gives you flexibility without any long-term commitments. Perfect for when you expect your workload to vary day by day.

Provisioned Throughput Pricing

Provisioned throughput pricing is designed for situations where you need that rock-solid, consistent performance. With this model, you reserve capacity ahead of time, which guarantees throughput and smooth performance. You’re billed hourly per model unit.

With provisioned throughput pricing, you can choose between 1-month and 6-month commitments. The longer the commitment, the better the rate.

One good use case for a provisioned throughput model is a machine translation service that constantly processes a high volume of data. Here’s a pricing example:

- Workload: Predictable, at 1,000,000 input tokens per hour

- Commitment: You make a 1-month commitment for 1 unit of a model, which costs $39.60 per hour.

Given these parameters, it’s easy to calculate the cost breakdown:

- Hourly cost: $39.60 per model unit

- Monthly cost: 24 hours/day * 30 days * $39.60/hour = $28,512/month

Yes, that’s a hefty price tag. But it’s well-suited for larger, established organizations running big projects with steady workloads. To determine if this is worth it, make the equivalent calculation for the on-demand model, and compare the result.
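
Here is that comparison sketched in Python for the example workload. It assumes 1 model unit at the 1-month rate above and a 30-day month running 24 hours a day. It also counts input tokens only, because the example doesn't state an output volume. Whether a single model unit can actually sustain this throughput is a separate question that this sketch doesn't address.

```python
# Compare the provisioned-throughput example with on-demand pricing for the
# same workload (input tokens only, as stated in the example).
HOURS_PER_MONTH = 24 * 30

PROVISIONED_RATE_PER_HOUR = 39.60    # USD per model unit, 1-month commitment
ON_DEMAND_INPUT_RATE_PER_1K = 0.0008  # USD per 1,000 input tokens


def provisioned_monthly_cost(model_units: int = 1) -> float:
    """Provisioned cost for a full month of reserved model units."""
    return model_units * PROVISIONED_RATE_PER_HOUR * HOURS_PER_MONTH


def on_demand_monthly_input_cost(input_tokens_per_hour: int) -> float:
    """On-demand cost for the same steady hourly input volume."""
    monthly_tokens = input_tokens_per_hour * HOURS_PER_MONTH
    return monthly_tokens / 1_000 * ON_DEMAND_INPUT_RATE_PER_1K


provisioned = provisioned_monthly_cost()
on_demand = on_demand_monthly_input_cost(1_000_000)
print(f"provisioned: ${provisioned:,.2f}/month")
print(f"on-demand:   ${on_demand:,.2f}/month")
```

Under these input-only assumptions, on-demand comes out far cheaper ($576 vs. $28,512). That is exactly why the comparison is worth running. Adding output tokens or a much larger volume changes the result.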

Provisioned throughput pricing gives you the peace of mind of consistent performance at a predictable cost–great for large, steady workloads.

Batch Processing

With batch processing, you can submit a set of prompts as a single input file, making it efficient for processing a large dataset all at once. The pricing calculation is very similar to on-demand, with one important caveat: you’ll often get a 50% discount for batch processing for supported FMs compared to on-demand processing.

This discount is great news: who doesn't love saving money? Unfortunately, batch processing isn't supported for all models. Refer to the Amazon Bedrock documentation for the full list of supported models.

Claude Instant doesn't currently support batch processing, but for this pricing example we'll run the numbers as if it did. Its usual input price of $0.0008 per 1,000 tokens would then be discounted to $0.0004 per 1,000 tokens. Suppose you have a database of customer reviews and want to run sentiment analysis on them. Here are our assumptions:

- Dataset: 1 million customer reviews; each review takes 100 tokens to process

- Model rate: $0.0004 per 1,000 tokens

Now, the cost breakdown:

- Total tokens: 1,000,000 reviews * 100 tokens = 100,000,000 total tokens

- Total cost: 100,000,000 tokens / 1,000 * $0.0004 = $40 for the batch
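
The batch estimate is the on-demand formula with a discount applied. Here is a minimal sketch, assuming the flat 50% discount described above. Like the example, it treats Claude Instant as batch-eligible, which is a hypothetical. The `discount` parameter is our own addition so you can compare against on-demand.

```python
# Batch pricing sketch: on-demand rate minus the batch discount.
ON_DEMAND_RATE_PER_1K = 0.0008  # USD per 1,000 tokens (Claude Instant input)
BATCH_DISCOUNT = 0.50           # 50% off on-demand for supported models


def batch_cost(num_records: int, tokens_per_record: int,
               on_demand_rate_per_1k: float = ON_DEMAND_RATE_PER_1K,
               discount: float = BATCH_DISCOUNT) -> float:
    """Estimated cost in USD for one batch job."""
    total_tokens = num_records * tokens_per_record
    batch_rate_per_1k = on_demand_rate_per_1k * (1 - discount)
    return total_tokens / 1_000 * batch_rate_per_1k


cost = batch_cost(1_000_000, 100)
print(f"batch job: ${cost:.2f}")
```

Calling `batch_cost(1_000_000, 100, discount=0.0)` gives the on-demand equivalent ($80), which shows the saving at a glance.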

Batch processing is great for efficiently handling large datasets, and you get a nice discount compared to on-demand processing.

Model Customization

Model customization is where you take an FM and make it your own by fine-tuning it with your own data. Pricing here is based on two factors: first, the number of tokens processed during training, and second, the monthly storage fee for the customized model.

Model customization is a game-changer for businesses that need AI solutions to fit their specific needs. One example would be to create a language model for accurate product recommendations or specific customer interactions. Suppose you wanted to do this with the following assumptions:

- Dataset: Suppose fine-tuning the model requires 100,000,000 tokens ($0.002 per 1,000 tokens)

- Storage: After customization, suppose the model size is 100GB ($0.05 per GB per month)

Here’s the cost breakdown for this job:

- Customization cost: 100,000,000 tokens / 1,000 * $0.002 = $200 (one-time)

- Storage cost: 100GB * $0.05 per GB = $5/month

- Total initial cost: $200 + $5 = $205 for the first month, then $5 each month thereafter
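
Customization has a one-time training charge and a recurring storage charge, so it helps to project the total over the months you expect to keep the model. Here is a minimal sketch using the example's assumed figures ($0.002 per 1,000 training tokens and $0.05 per GB-month). These are illustrative rates, not published Bedrock prices, and the helper names are our own.

```python
# Model-customization cost sketch using the example's assumed rates.
TRAINING_RATE_PER_1K = 0.002     # USD per 1,000 training tokens (assumed)
STORAGE_RATE_PER_GB_MONTH = 0.05  # USD per GB per month (assumed)


def customization_costs(training_tokens: int, model_size_gb: float):
    """Return (one-time training cost, recurring monthly storage cost) in USD."""
    training = training_tokens / 1_000 * TRAINING_RATE_PER_1K
    storage = model_size_gb * STORAGE_RATE_PER_GB_MONTH
    return training, storage


def cumulative_cost(training_tokens: int, model_size_gb: float, months: int) -> float:
    """Total spend after storing the customized model for `months` months."""
    training, storage = customization_costs(training_tokens, model_size_gb)
    return training + storage * months


training, storage = customization_costs(100_000_000, 100)
print(f"training: ${training:.2f}, storage: ${storage:.2f}/month")
print(f"after 12 months: ${cumulative_cost(100_000_000, 100, 12):.2f}")
```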

Customizing a model gives you a powerful AI solution tailored to your needs, with costs primarily driven by the size of the dataset and the storage required.

Model Evaluation

Model evaluation is a flexible option that lets you test different models before committing to further use. With no volume commitments, you only pay based on how much you use.

If you're still in the early stages of a project, testing out different models to see which works best for your specific task, you're doing model evaluation. One example is a text classification project. Here are some assumptions for a pricing exercise:

- Workload: Let’s say you run 20 evaluation jobs, each requiring 100,000 tokens.

- Cost: The evaluation cost is $0.002 per 1,000 tokens.

Here’s the cost breakdown for this entire operation:

- Cost per job: 100,000 tokens / 1,000 * $0.002 = $0.20

- Total: 20 jobs * $0.20 = $4
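
A small script keeps the per-job and total figures consistent as you add jobs or change token counts. Here is a minimal sketch, assuming the flat $0.002 per 1,000 tokens rate from the example. The function name is our own.

```python
# Model-evaluation cost sketch with the example's assumed flat rate.
EVALUATION_RATE_PER_1K = 0.002  # USD per 1,000 tokens (assumed)


def evaluation_cost(num_jobs: int, tokens_per_job: int,
                    rate_per_1k: float = EVALUATION_RATE_PER_1K):
    """Return (cost per job, total cost) in USD."""
    per_job = tokens_per_job / 1_000 * rate_per_1k
    return per_job, per_job * num_jobs


per_job, total = evaluation_cost(20, 100_000)
print(f"per job: ${per_job:.2f}, total: ${total:.2f}")
```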

Model evaluation is crucial for helping you determine the best-fit model without committing to large-scale usage upfront.

Cost Optimization Strategies for AWS Bedrock

Before we dive into cost-saving tips, let’s quickly highlight a few challenges that can make AWS Bedrock pricing tricky to manage:

- Dynamic usage patterns: Your workloads can fluctuate, causing unpredictable costs.

- Complexity of cost structures: There are several pricing models and different rates for different foundation models (FMs), which can be overwhelming.

- Visibility into costs: Without proper monitoring, it’s tough to see exactly where your money is going.

The good news is you can tackle all of these issues with a few simple strategies.

Monitoring Your Workloads

Want to optimize your Bedrock costs? Start by keeping an eye on your usage. AWS provides great tools like Amazon CloudWatch, which lets you monitor near real-time metrics such as token counts and model invocations. Here are some essential CloudWatch features to take advantage of:

- Custom dashboards: Track the metrics that matter most to your application, such as input/output token counts or invocation latency.

- Alarms: Set usage thresholds so that CloudWatch notifies you before things get too expensive.
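
Alarms can also be created programmatically, which makes them easy to repeat for every model you use. Here is a minimal sketch using boto3's `put_metric_alarm`. It assumes Bedrock publishes an `OutputTokenCount` metric in the `AWS/Bedrock` namespace with a `ModelId` dimension, so verify that in your account's CloudWatch console. The threshold and SNS topic ARN are hypothetical placeholders.

```python
# Sketch: a CloudWatch alarm on daily Bedrock output tokens for one model.
# Assumed: OutputTokenCount in the AWS/Bedrock namespace, ModelId dimension.
# The threshold and SNS topic ARN below are placeholders.


def build_token_alarm(model_id: str, daily_token_threshold: int,
                      sns_topic_arn: str) -> dict:
    """Keyword arguments for boto3's cloudwatch.put_metric_alarm."""
    return {
        "AlarmName": f"bedrock-output-tokens-{model_id}",
        "Namespace": "AWS/Bedrock",
        "MetricName": "OutputTokenCount",
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "Statistic": "Sum",                  # total tokens in each period
        "Period": 86_400,                    # one-day periods, in seconds
        "EvaluationPeriods": 1,              # alarm on a single breaching day
        "Threshold": float(daily_token_threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no traffic is not an alarm
        "AlarmActions": [sns_topic_arn],     # notify via SNS
    }


def create_alarm(params: dict) -> None:
    """Create or update the alarm (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_token_alarm works without boto3

    boto3.client("cloudwatch").put_metric_alarm(**params)


# Hypothetical threshold: 1.5x the chatbot example's 100,000 output tokens/day.
params = build_token_alarm(
    model_id="anthropic.claude-instant-v1",
    daily_token_threshold=150_000,
    sns_topic_arn="arn:aws:sns:us-east-1:123456789012:bedrock-cost-alerts",  # placeholder
)
# create_alarm(params)  # uncomment to create the alarm in your account
```

Summing over one-day periods keeps the alarm aligned with a daily budget. A shorter period would catch runaway traffic faster, at the cost of noisier alerts.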

Additionally, you can use AWS CloudTrail to log API calls. It gives you a handy audit trail that shows who is using which resources, and how. Stay on top of your workloads, and those surprise charges won't have a chance to sneak up on you.

Optimizing Model Usage

Bedrock offers a wide selection of FMs. While it’s tempting to go for the “best” or the “cheapest”, the real key is finding the most cost-effective option for your specific use case.

Not all models are created equal. Some are total overkill for certain applications. For example, if you're working on a simple text classification task, there's no need to use a complex, expensive model. Instead, choose a smaller FM that's cheaper and better suited to your needs. This lowers your per-token costs without giving up the quality your application actually requires.

By matching your model choice to the complexity of your task, you can get the job done efficiently and avoid unnecessary expenses.
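
One way to make this concrete is to score candidate models on your own evaluation set and then pick the cheapest one that clears your quality bar. Here is a minimal sketch of that selection rule. Only the Claude Instant rates come from this article. The "larger-model" entry, its rates, and both quality scores are hypothetical placeholders for your own benchmarks.

```python
# Sketch: pick the cheapest model whose quality score clears a threshold.
# Quality scores and the "larger-model" entry are hypothetical placeholders.
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str
    input_rate_per_1k: float   # USD per 1,000 input tokens
    output_rate_per_1k: float  # USD per 1,000 output tokens
    quality_score: float       # e.g. accuracy on your own evaluation set


def cost_per_request(model: ModelOption, input_tokens: int, output_tokens: int) -> float:
    return (input_tokens / 1_000 * model.input_rate_per_1k
            + output_tokens / 1_000 * model.output_rate_per_1k)


def cheapest_adequate(models, min_quality, input_tokens, output_tokens):
    """Return the cheapest model meeting min_quality, or None if none qualifies."""
    adequate = [m for m in models if m.quality_score >= min_quality]
    if not adequate:
        return None
    return min(adequate, key=lambda m: cost_per_request(m, input_tokens, output_tokens))


options = [
    ModelOption("claude-instant", 0.0008, 0.0024, quality_score=0.91),  # score hypothetical
    ModelOption("larger-model", 0.008, 0.024, quality_score=0.95),      # all hypothetical
]
choice = cheapest_adequate(options, min_quality=0.90, input_tokens=10, output_tokens=100)
print(choice.name)  # claude-instant
```

Raising `min_quality` to 0.93 in this toy setup switches the choice to the pricier model. That is the trade-off the section describes, expressed as a single threshold.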

Utilizing Reserved Capacity

For larger organizations, one of the most effective ways to cut down on AWS Bedrock costs is Provisioned Throughput. It lets you reserve capacity and guarantees consistent performance, which can lead to significant savings compared to on-demand pricing when your usage stays consistently high.

The catch is that this really only applies to huge but stable workloads. Provisioned throughput rates are likely too high for most small, independent use cases.

Of course, there is a way to know for sure whether to switch to provisioned throughput: monitor your usage. This gives you the data to make smart, informed decisions about whether reserving capacity is worth it.
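
Once monitoring gives you monthly token totals, the decision becomes a simple cost comparison. Here is a minimal sketch using this article's Claude Instant rates and the 1-month provisioned rate. The helper names are our own. The sketch only compares cost and ignores whether one model unit can absorb your peak traffic, which is a separate capacity question.

```python
# Sketch: compare on-demand and provisioned cost from monthly token totals.
HOURS_PER_MONTH = 24 * 30
INPUT_RATE_PER_1K = 0.0008         # USD per 1,000 input tokens (on-demand)
OUTPUT_RATE_PER_1K = 0.0024        # USD per 1,000 output tokens (on-demand)
PROVISIONED_RATE_PER_HOUR = 39.60  # USD per model unit, 1-month commitment


def monthly_on_demand_cost(input_tokens: int, output_tokens: int) -> float:
    return (input_tokens / 1_000 * INPUT_RATE_PER_1K
            + output_tokens / 1_000 * OUTPUT_RATE_PER_1K)


def monthly_provisioned_cost(model_units: int = 1) -> float:
    return model_units * PROVISIONED_RATE_PER_HOUR * HOURS_PER_MONTH


def provisioned_is_cheaper(input_tokens: int, output_tokens: int,
                           model_units: int = 1) -> bool:
    return monthly_provisioned_cost(model_units) < monthly_on_demand_cost(
        input_tokens, output_tokens)


def break_even_input_tokens(output_per_input: float, model_units: int = 1) -> float:
    """Monthly input tokens at which on-demand spend equals the provisioned cost,
    assuming output tokens = output_per_input * input tokens."""
    blended_rate_per_1k = INPUT_RATE_PER_1K + output_per_input * OUTPUT_RATE_PER_1K
    return monthly_provisioned_cost(model_units) / blended_rate_per_1k * 1_000


# The chatbot example: ~300,000 input and 3,000,000 output tokens per month.
print(provisioned_is_cheaper(300_000, 3_000_000))  # False
print(f"{break_even_input_tokens(10):,.0f} input tokens/month to break even")
```

The break-even figure gives you a concrete number to watch for on your CloudWatch dashboards, instead of a gut feeling about "huge" workloads.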

Batch Processing for Cost Efficiency

Again, for larger datasets, setting up batch processing can be a smart way to reduce costs in AWS Bedrock. Rather than processing requests on demand and in real time, batch processing lets you submit a large dataset as a single input file at a discounted per-token rate.

This works best for use cases like sentiment analysis or language translation, where you can process everything as a batch rather than analyzing each text individually. Bundling requests into one big batch doesn't reduce the number of tokens processed, but you pay the lower batch rate for them and streamline your workflows.
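
Bedrock batch inference reads its prompts from a JSON Lines file in Amazon S3, with one record per line containing a `recordId` and a `modelInput` request body. Here is a minimal sketch of building such a file for the sentiment-analysis case. It assumes the Anthropic Messages request body (`anthropic_version`, `max_tokens`, `messages`). The prompt wording, `max_tokens` value, and record-ID format are illustrative choices, and other model providers expect a different body shape.

```python
# Sketch: build a JSON Lines input file for a Bedrock batch inference job.
# The modelInput body assumes the Anthropic Messages format; the prompt and
# record-ID scheme are illustrative, not prescribed.
import json


def build_batch_records(reviews, max_tokens=10):
    """Yield one batch-inference record per review."""
    for i, review in enumerate(reviews):
        yield {
            "recordId": f"REC{i:08d}",  # 11-character alphanumeric ID
            "modelInput": {
                "anthropic_version": "bedrock-2023-05-31",
                "max_tokens": max_tokens,  # a one-word label needs few tokens
                "messages": [
                    {
                        "role": "user",
                        "content": "Classify the sentiment of this review as "
                                   f"positive, negative, or neutral:\n\n{review}",
                    }
                ],
            },
        }


def to_jsonl(records) -> str:
    """Serialize records as JSON Lines: one compact JSON object per line."""
    return "\n".join(json.dumps(r) for r in records) + "\n"


jsonl = to_jsonl(build_batch_records(["Great product!", "Arrived broken."]))
print(jsonl)
```

You would then upload the file to S3 and reference it when creating the batch job (the `CreateModelInvocationJob` operation in the Bedrock API). Keeping `max_tokens` small matters here, because output tokens are the pricier side of the bill.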

AWS Tags and Cost Categories for Cost Visibility

To get a clearer picture of AWS Bedrock expenses, start by implementing resource tags and organizing them with AWS Cost Categories. Tagging allows you to track costs by project, team, or environment, ensuring accountability across your organization.

With AWS Cost Categories, you can then group these tagged resources into customized spending buckets that align costs with business objectives. Together, these strategies provide the transparency and control you need to make informed decisions and optimize your AWS Bedrock usage.
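
Once your tags are activated as cost allocation tags, you can pull spend per tag value programmatically. Here is a minimal sketch using the Cost Explorer `GetCostAndUsage` API through boto3. The tag key `project` is hypothetical. The sketch also assumes your Bedrock charges appear under the `Amazon Bedrock` service name, but charges for some third-party models may be listed under separate service names. Check the SERVICE values in your own account and pass them in.

```python
# Sketch: Bedrock spend per value of a cost-allocation tag via Cost Explorer.
# Assumed: the hypothetical tag key "project" is activated, and the service
# names passed in match the SERVICE values your Bedrock charges appear under.


def build_cost_query(start: str, end: str, tag_key: str = "project",
                     services: tuple = ("Amazon Bedrock",)) -> dict:
    """Keyword arguments for boto3's ce.get_cost_and_usage (dates as YYYY-MM-DD)."""
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": list(services)}},
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def costs_by_tag(response: dict) -> dict:
    """Sum UnblendedCost per tag value across every period in one response page."""
    totals: dict = {}
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            # Tag groups are keyed "tagkey$value"; an empty value means untagged.
            value = group["Keys"][0].split("$", 1)[-1] or "(untagged)"
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[value] = totals.get(value, 0.0) + amount
    return totals


def fetch_costs_by_tag(start: str, end: str, tag_key: str = "project") -> dict:
    """Query Cost Explorer (needs boto3 and AWS credentials); ignores NextPageToken."""
    import boto3  # imported lazily so the helpers above work without boto3

    ce = boto3.client("ce")
    return costs_by_tag(ce.get_cost_and_usage(**build_cost_query(start, end, tag_key)))
```

A large "(untagged)" bucket in the result is a quick signal that your tagging strategy has gaps.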

We've written two helpful guides on tagging resources and cost categories:

- AWS Cost Categories: A Better Way to Organize Costs

- AWS Tags Best Practices and AWS Tagging Strategies

Conclusion

AWS Bedrock is a powerhouse for building and scaling generative AI applications, but its pricing models can be complex. Effective cost monitoring and management are crucial to ensure you're getting the most value without overspending. This includes choosing the right model and using batch processing or provisioned throughput when appropriate.

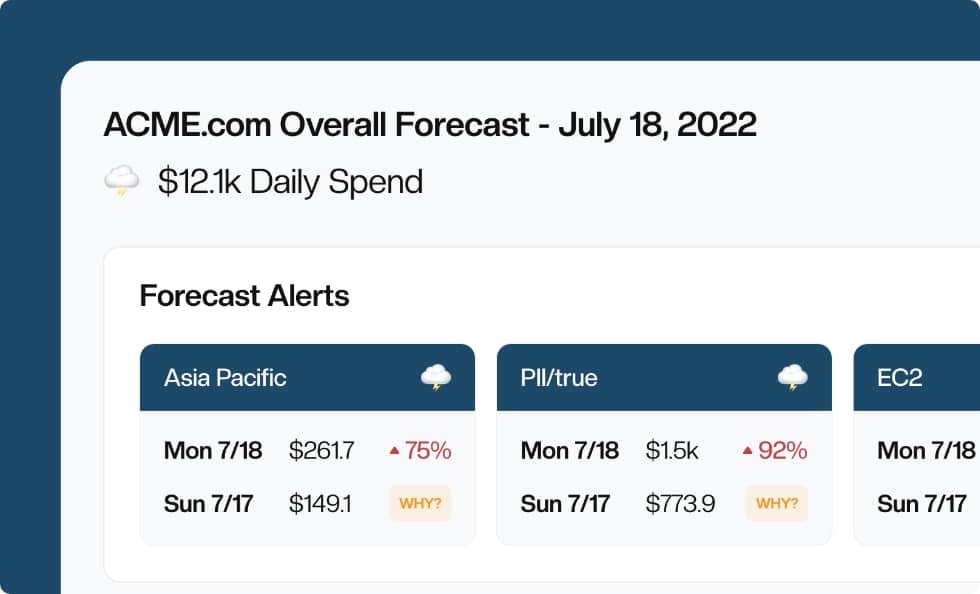

Need a little extra help? Check out tools like CloudForecast, which makes tracking your Bedrock (and overall AWS) spending a breeze. CloudForecast can help you proactively catch those sneaky cost spikes early to keep your operation running smoothly.
