Using kOps to Deploy Kubernetes to AWS

Kubernetes becomes more efficient the more it’s automated, so for resource management it’s in your best interest to put processes in place that automate as many repetitive tasks as possible. Enter kops, short for Kubernetes Operations. Simply defined, kops automates the provisioning of Kubernetes clusters on AWS and GCE. More specifically, it creates, upgrades, and manages the cloud infrastructure a cluster runs on, such as instances, networking, and DNS records. That saves your team many hours of manual work.

Installing Kubernetes on AWS with kops

This tutorial shows you how to quickly set up, configure, and deploy Kubernetes on AWS using kops.

Prerequisites

Before setting up Kubernetes on AWS, you need:

- an AWS account

- the AWS CLI installed

- a domain to access the Kubernetes API

- a hosted zone in Route53 for that domain, with the domain’s name servers pointing to it

- an IAM user with full S3, EC2, Route53, VPC, and IAM access

Step 1. Installing kops

Because Linux is the most widely used operating system in the cloud, the commands below are for Linux on amd64 (x86-64).

Use the following commands to install kops.

The first command asks the GitHub API for the tag of the latest kops release, then downloads the matching Linux binary from GitHub:

wget -O kops https://github.com/kubernetes/kops/releases/download/$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)/kops-linux-amd64

This line makes the downloaded kops binary executable:

chmod +x ./kops

This moves kops into /usr/local/bin so that it’s on your PATH:

sudo mv ./kops /usr/local/bin/

If none of these commands returns an error, kops is installed. You can confirm this by running kops version.
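The wget command above is hard-coded for Linux on amd64. If you also install kops on other machines, a small helper can choose the asset name for you. This is a minimal sketch that assumes the release assets follow the same kops-<os>-<arch> pattern as kops-linux-amd64; kops_asset_name is a hypothetical name, so check the releases page for the assets that actually exist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map `uname -s` / `uname -m` values to a kops
# release asset name. Assumes assets are named kops-<os>-<arch>, like
# the kops-linux-amd64 binary downloaded above.
kops_asset_name() {
  local os arch
  case "$1" in
    Linux)  os="linux" ;;
    Darwin) os="darwin" ;;
    *) echo "unsupported OS: $1" >&2; return 1 ;;
  esac
  case "$2" in
    x86_64|amd64)  arch="amd64" ;;
    aarch64|arm64) arch="arm64" ;;
    *) echo "unsupported architecture: $2" >&2; return 1 ;;
  esac
  echo "kops-${os}-${arch}"
}

# Example: print the asset name for the current machine.
#   kops_asset_name "$(uname -s)" "$(uname -m)"
```

You could then substitute $(kops_asset_name "$(uname -s)" "$(uname -m)") for kops-linux-amd64 in the wget URL.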

Step 2. Installing kubectl

Next, install kubectl, the Kubernetes command-line tool. To get the latest release, follow the current kubectl installation docs for your platform.
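At the time of writing, the Linux instructions in those docs download the binary from dl.k8s.io. The sketch below only builds that download URL for a given version and architecture. kubectl_download_url is a hypothetical helper, and the commented commands mirror the official docs, which remain the authority if they change.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the kubectl download URL for a release and
# architecture, following the dl.k8s.io layout used in the Linux docs.
kubectl_download_url() {
  local version="$1" arch="${2:-amd64}"
  case "$version" in
    v*) ;;  # versions look like v1.30.0
    *) echo "version must look like vX.Y.Z: $version" >&2; return 1 ;;
  esac
  echo "https://dl.k8s.io/release/${version}/bin/linux/${arch}/kubectl"
}

# Typical use (requires network access):
#   version="$(curl -L -s https://dl.k8s.io/release/stable.txt)"
#   curl -LO "$(kubectl_download_url "$version")"
#   curl -LO "$(kubectl_download_url "$version").sha256"
#   echo "$(cat kubectl.sha256)  kubectl" | sha256sum --check
#   sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
```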

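To confirm the installation and see the available commands, run kubectl with no arguments in your terminal. It prints a help summary similar to the one below, which comes from an older kubectl release; newer releases list a somewhat different set of commands (rolling-update, for example, has since been removed).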

kubectl controls the Kubernetes cluster manager.

Find more information at https://github.com/kubernetes/kubernetes.

Basic Commands (Beginner):
  create         Create a resource from a file or from stdin.
  expose         Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service
  run            Run a particular image on the cluster
  set            Set specific features on objects
  run-container  Run a particular image on the cluster. This command is deprecated, use "run" instead

Basic Commands (Intermediate):
  get            Display one or many resources
  explain        Documentation of resources
  edit           Edit a resource on the server
  delete         Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:
  rollout        Manage the rollout of a resource
  rolling-update Perform a rolling update of the given ReplicationController
  scale          Set a new size for a Deployment, ReplicaSet, Replication Controller, or Job
  autoscale      Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:
  certificate    Modify certificate resources.
  cluster-info   Display cluster info
  top            Display Resource (CPU/Memory/Storage) usage.
  cordon         Mark node as unschedulable
  uncordon       Mark node as schedulable
  drain          Drain node in preparation for maintenance
  taint          Update the taints on one or more nodes

Troubleshooting and Debugging Commands:
  describe       Show details of a specific resource or group of resources
  logs           Print the logs for a container in a pod
  attach         Attach to a running container
  exec           Execute a command in a container
  port-forward   Forward one or more local ports to a pod
  proxy          Run a proxy to the Kubernetes API server
  cp             Copy files and directories to and from containers.
  auth           Inspect authorization

Advanced Commands:
  apply          Apply a configuration to a resource by filename or stdin
  patch          Update field(s) of a resource using strategic merge patch
  replace        Replace a resource by filename or stdin
  convert        Convert config files between different API versions

Settings Commands:
  label          Update the labels on a resource
  annotate       Update the annotations on a resource
  completion     Output shell completion code for the specified shell (bash or zsh)

Other Commands:
  api-versions   Print the supported API versions on the server, in the form of "group/version"
  config         Modify kubeconfig files
  help           Help about any command
  plugin         Runs a command-line plugin
  version        Print the client and server version information

Use "kubectl <command> --help" for more information about a given command.
Use "kubectl options" for a list of global command-line options (applies to all commands).

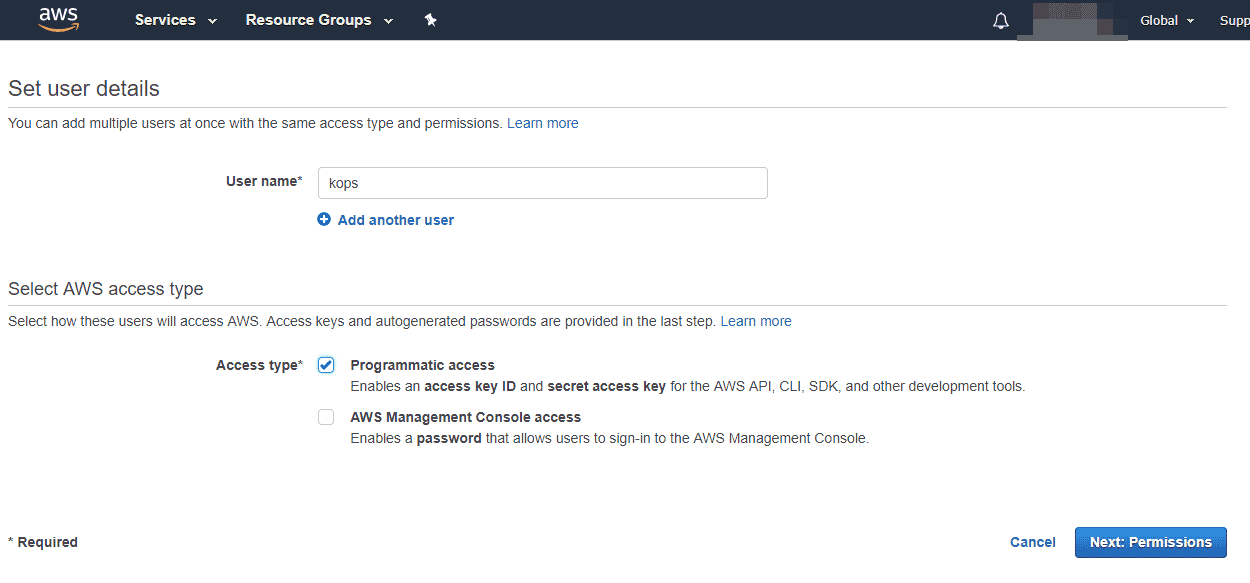

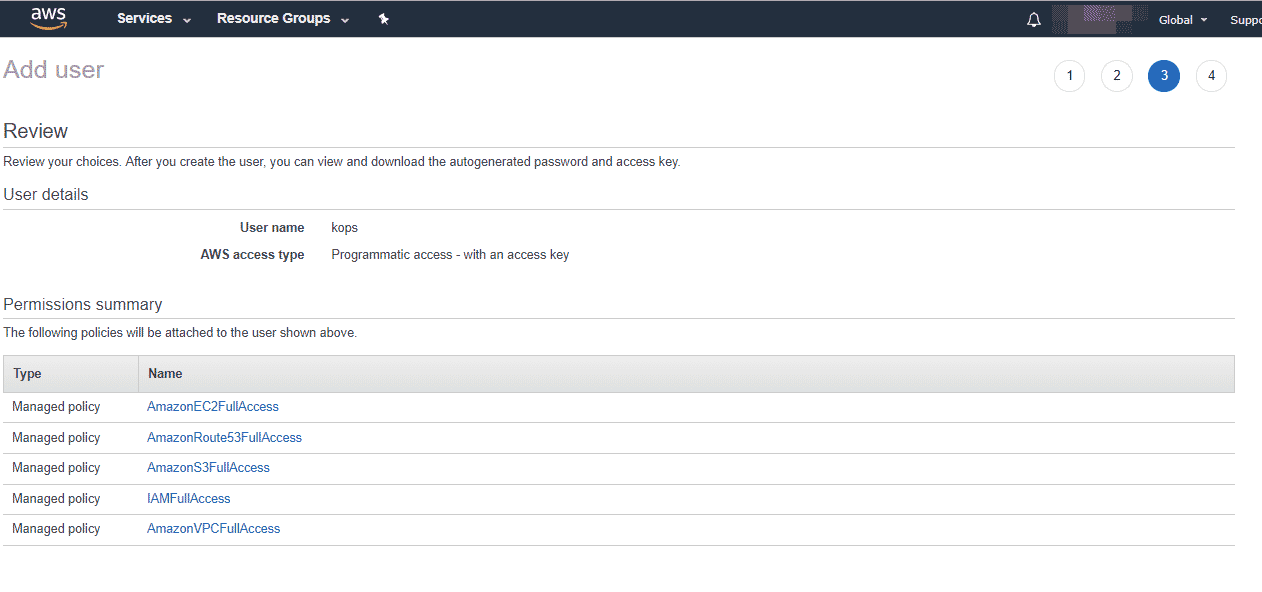

Step 3. Creating a New IAM User on the AWS Console

Sign in to the AWS console, open IAM, select Users, and then choose Add users to create a new user with programmatic access. kops will use this user’s credentials to create and manage your cluster’s AWS resources.

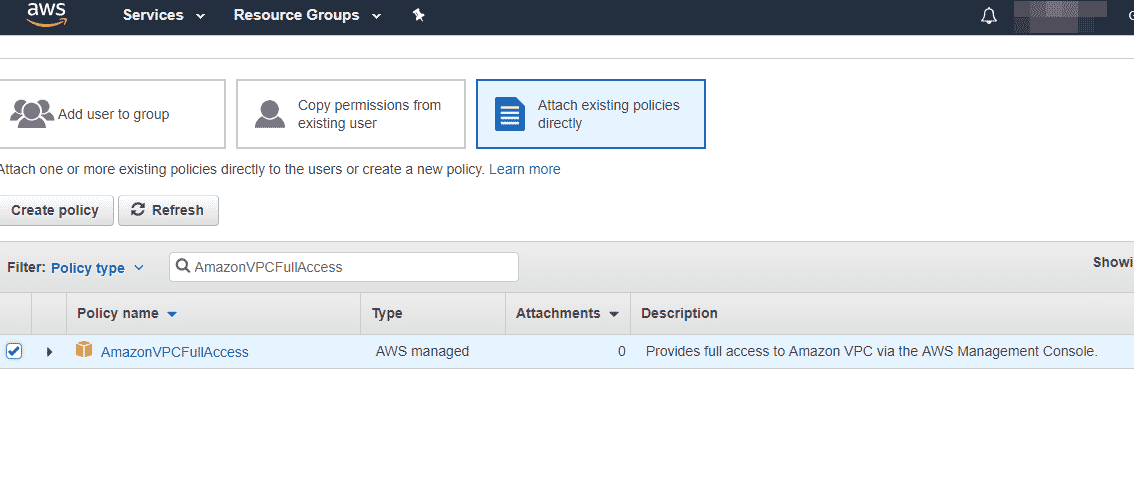

Attach the following permissions (AWS managed policies) to this user, then click Next:

- AmazonEC2FullAccess

- AmazonRoute53FullAccess

- AmazonS3FullAccess

- IAMFullAccess

- AmazonVPCFullAccess

The AWS console then asks you to review these permissions; confirm them to create the user.

You should see a success message in the console. Record the access key ID and secret access key now: the console shows the secret only once, and you’re going to need both right away.
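If you prefer the command line to the console, the same user can be created with the AWS CLI, run from an account that already has IAM permissions. The sketch below assumes a user named kops (a hypothetical choice) and attaches the five managed policies listed above. With DRY_RUN=1 it only prints the commands so you can review them first.

```shell
#!/usr/bin/env bash
# CLI sketch of Step 3: create an IAM user, attach the five AWS managed
# policies listed above, and create an access key for it.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

create_kops_iam_user() {
  local user="${1:-kops}" policy
  run aws iam create-user --user-name "$user" || return
  for policy in AmazonEC2FullAccess AmazonRoute53FullAccess \
                AmazonS3FullAccess IAMFullAccess AmazonVPCFullAccess; do
    run aws iam attach-user-policy --user-name "$user" \
      --policy-arn "arn:aws:iam::aws:policy/${policy}" || return
  done
  # Prints the new AccessKeyId and SecretAccessKey; store them safely.
  run aws iam create-access-key --user-name "$user"
}

# Preview first, then run for real:
#   DRY_RUN=1 create_kops_iam_user kops
#   create_kops_iam_user kops
```

The last command prints the new access key ID and secret access key, which take the place of the console’s success page.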

To make the credentials available to kops, export them as environment variables, using your access key and secret key:

export AWS_ACCESS_KEY_ID=<replace with your access key>

export AWS_SECRET_ACCESS_KEY=<replace with your secret key>

Step 4. Configuring DNS and Route53

To build a Kubernetes cluster, kops needs a DNS zone in which to create the cluster’s records, such as the one for the Kubernetes API, so you first need to decide where those records will live. There are several ways to go about this depending on your situation. For this article, I assume you have a domain purchased or hosted via AWS.

If you have a domain purchased or hosted via AWS

In this situation, you should already have a hosted zone in Route53, so you don’t need to do anything else. Your Kubernetes records will look like:

etcd-us-east-1c.internal.clustername.yourdomain.com

If you have a subdomain under a domain purchased or hosted via AWS

Here, all your Kubernetes records will live under a subdomain of the parent domain. This means that you will have to create a second hosted zone in Route53 for the subdomain, then delegate the subdomain to it by adding the new zone’s NS records to the parent zone. As a result, the Kubernetes records will look like:

etcd-us-east-1c.internal.clustername.subdomain.yourdomain.com

To copy the NS servers of your subdomain to your parent domain in Route53, you will need jq, a lightweight command-line JSON processor, installed locally.

If you haven’t created the subdomain’s hosted zone yet, create it with the following command, which prints the new zone’s name servers. Otherwise, move on to the next command.

ID=$(uuidgen) && aws route53 create-hosted-zone --name subdomain.yourdomain.com --caller-reference "$ID" | jq .DelegationSet.NameServers

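Next, look up and note the ID of your parent domain’s hosted zone:

aws route53 list-hosted-zones | jq '.HostedZones[] | select(.Name=="yourdomain.com.") | .Id'

The ID is printed in the form "/hostedzone/<ID>"; the part after the last slash is the zone ID.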

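Then create a new JSON file, subdomain.json, with your values. Replace the four Value entries with the name servers printed when you created the subdomain’s hosted zone.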

{
  "Comment": "Create a subdomain NS record in the parent domain",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "subdomain.yourdomain.com",
        "Type": "NS",
        "TTL": 300,
        "ResourceRecords": [
          { "Value": "ns-1.<yourdomain-aws-dns>-1.co.uk" },
          { "Value": "ns-2.<yourdomain-aws-dns>-2.org" },
          { "Value": "ns-3.<yourdomain-aws-dns>-3.com" },
          { "Value": "ns-4.<yourdomain-aws-dns>-4.net" }
        ]
      }
    }
  ]
}
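Rather than editing the file by hand, you can generate it. The helper below is a hypothetical sketch that prints a change batch in the same shape as subdomain.json for any number of name servers. It does not escape JSON special characters, which is fine for name-server host names but not for arbitrary input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Route 53 change batch that creates an NS
# record for a subdomain, in the same shape as subdomain.json above.
# Usage: make_ns_change_batch <subdomain> <ns1> [ns2 ...] > subdomain.json
make_ns_change_batch() {
  local name="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "usage: make_ns_change_batch <subdomain> <nameserver>..." >&2
    return 1
  fi
  local records="" ns
  for ns in "$@"; do
    # Append {"Value": "<ns>"}, separating entries with commas.
    records="${records:+${records},}{\"Value\": \"${ns}\"}"
  done
  cat <<EOF
{
  "Comment": "Create a subdomain NS record in the parent domain",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "NS",
        "TTL": 300,
        "ResourceRecords": [${records}]
      }
    }
  ]
}
EOF
}
```

For example, assuming you know the subdomain zone’s ID, make_ns_change_batch subdomain.yourdomain.com $(aws route53 get-hosted-zone --id <subdomain-zone-id> --query 'DelegationSet.NameServers' --output text) > subdomain.json fills in the four values. The command substitution is left unquoted on purpose so that each name server becomes its own argument.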

From here, you can apply the subdomain NS records to the parent-hosted zone using the following command:

aws route53 change-resource-record-sets \
  --hosted-zone-id <parent-zone-id> \
  --change-batch file://subdomain.json
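Delegation can take a little while to propagate. One way to check it, assuming dig is installed (it ships in packages such as dnsutils or bind-utils), is to compare the output of dig +short NS subdomain.yourdomain.com with the name servers you put in subdomain.json. The helper below is a hypothetical sketch of that comparison; it ignores letter case and the trailing dots that dig prints.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the NS records read on stdin (for
# example from `dig +short NS subdomain.yourdomain.com`) match the expected
# name servers given as arguments, ignoring case and trailing dots.
normalize_ns() {
  tr '[:upper:]' '[:lower:]' | sed -e 's/\.$//' -e '/^$/d' | sort -u
}

ns_delegation_ok() {
  local expected actual
  expected="$(printf '%s\n' "$@" | normalize_ns)"
  actual="$(normalize_ns)"
  # An empty answer means the delegation is not visible yet.
  [ -n "$actual" ] && [ "$expected" = "$actual" ]
}

# Usage:
#   dig +short NS subdomain.yourdomain.com | ns_delegation_ok <ns1> <ns2> <ns3> <ns4>
```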

Step 5. Creating a New S3 Bucket

To store the state and representation of your cluster, you need to create a dedicated S3 bucket for kops. This bucket becomes the source of truth for your cluster configuration, which includes things like the number of nodes, the instance types, and the Kubernetes version you have installed.
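Two details are easy to miss when you create the bucket: bucket names must be globally unique and follow the S3 naming rules, and outside us-east-1 the create-bucket call also needs a LocationConstraint. The helper below is a hypothetical sketch that checks the basic naming rules (not every rule S3 enforces) and prints a matching create-bucket command for you to review.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a bucket name against the basic S3 naming
# rules (3-63 characters; lowercase letters, digits, dots and hyphens;
# starting and ending with a letter or digit) and print the matching
# create-bucket command. Regions other than us-east-1 need a LocationConstraint.
kops_state_bucket_cmd() {
  local bucket="$1" region="${2:-us-east-1}"
  if [ "${#bucket}" -lt 3 ] || [ "${#bucket}" -gt 63 ] ||
     ! [[ "$bucket" =~ ^[[:lower:][:digit:]][[:lower:][:digit:].-]*[[:lower:][:digit:]]$ ]]; then
    echo "invalid S3 bucket name: $bucket" >&2
    return 1
  fi
  if [ "$region" = "us-east-1" ]; then
    echo "aws s3api create-bucket --bucket $bucket --region $region"
  else
    echo "aws s3api create-bucket --bucket $bucket --region $region" \
         "--create-bucket-configuration LocationConstraint=$region"
  fi
}

# Example:
#   kops_state_bucket_cmd my-kops-state us-west-2
```

Once the bucket exists, the kops documentation also suggests exporting KOPS_STATE_STORE=s3://<your bucket name>; kops reads that variable, so later commands can omit --state.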

Use the following command to create the bucket:

aws s3api create-bucket --bucket <replace this with the name of your bucket>

Remember to enable versioning on the bucket in case you ever need to revert or recover a previous version of the cluster state. For this, use the following command:

aws s3api put-bucket-versioning --bucket <replace this with the name of your bucket> --versioning-configuration Status=Enabled

Step 6. Creating a Cluster

Now it’s time to create a cluster. Run the following command to create it:

kops create cluster \
  --name cluster.kubernetes-aws.io \
  --zones us-west-2a \
  --state s3://<the name of your bucket which is the state store> \
  --yes

Let’s break down this command:

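- --name: defines the name of the cluster; for a DNS-based cluster like this one, it is a name under your hosted zone

- --zones: defines the Availability Zones in which the cluster is created

- --state: points to the S3 bucket that is the state store

- --yes: creates the cluster immediately instead of only writing its configuration to the state store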

Don’t forget to replace cluster.kubernetes-aws.io with a cluster name under your own domain, such as cluster.yourdomain.com.
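The same step can be wrapped in a script that fails early when something is missing. The sketch below is a hypothetical example: CLUSTER_NAME and ZONES are variable names chosen for illustration, while KOPS_STATE_STORE is the variable kops itself reads for the state store. After creating the cluster, it exports admin credentials to your kubeconfig with kops export kubecfg --admin, which recent kops releases may require before kubectl can connect, and then waits with kops validate cluster --wait until the cluster reports healthy. With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch of Step 6 as a script. CLUSTER_NAME and ZONES are hypothetical
# variable names; KOPS_STATE_STORE is the variable kops reads.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

create_kops_cluster() {
  local var
  # Fail early if credentials, state store, or cluster name are missing.
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY KOPS_STATE_STORE CLUSTER_NAME; do
    if [ -z "${!var:-}" ]; then
      echo "missing environment variable: $var" >&2
      return 1
    fi
  done
  run kops create cluster \
    --name "$CLUSTER_NAME" \
    --zones "${ZONES:-us-west-2a}" \
    --state "$KOPS_STATE_STORE" \
    --yes || return
  # Write admin credentials to kubeconfig so kubectl can reach the cluster.
  run kops export kubecfg --name "$CLUSTER_NAME" --state "$KOPS_STATE_STORE" --admin || return
  # Block until the control plane and nodes pass validation (or time out).
  run kops validate cluster --name "$CLUSTER_NAME" --state "$KOPS_STATE_STORE" --wait 10m
}

# Example:
#   CLUSTER_NAME=cluster.yourdomain.com KOPS_STATE_STORE=s3://<your bucket> create_kops_cluster
```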

Congratulations! You have just created a cluster. The instances take several minutes to boot and join; once they have, you can use the kubectl command to interact with the cluster.

Note that kops places every instance it creates in an Auto Scaling group. If an instance fails its health checks, AWS replaces it automatically.

Testing the Cluster

If you want to check that the API is working, simply use the kubectl command to check the nodes:

kubectl get nodes

The output lists every node in the cluster, all of which kops created in the zones you passed with the --zones flag. This is how you test your cluster. And don’t forget that running kubectl on its own shows you all of the available commands.
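kops validate cluster is the built-in health check, but for a quick look at plain kubectl output, a hypothetical helper like the following counts how many nodes report Ready. It relies on STATUS being the second column of kubectl get nodes --no-headers; a cordoned node shows Ready,SchedulingDisabled and is not counted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on
# stdin and print "<ready>/<total>", treating column 2 as the STATUS.
count_ready_nodes() {
  awk '{ total++ } $2 == "Ready" { ready++ } END { printf "%d/%d\n", ready, total }'
}

# Usage against a live cluster:
#   kubectl get nodes --no-headers | count_ready_nodes
```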

Deleting the Cluster

A cluster is useful for development work, but its EC2 instances, volumes, and other resources all incur AWS charges, so at some point you will want to delete clusters you no longer need. Before you do that, be sure to preview and confirm all the AWS resources that will be destroyed when the cluster is deleted. You can do this using the following command:

kops delete cluster --name <the name of your cluster> --state=s3://<the name of your state bucket>

When you’re sure that you want to delete your cluster, run the same command again with the --yes flag:

kops delete cluster --name <the name of your cluster> --state=s3://<the name of your state bucket> --yes

Using kops to Generate Terraform Configuration

There are a lot of definitions for infrastructure as code (IaC) out there. A simple definition is that it’s the “process of managing and provisioning cloud resources through machine-readable definition files.” IaC statically declares these resources in code, then deploys and manages them through code.

Terraform is an IaC tool that you drive from the command line. It adds a layer of abstraction over providers such as AWS and describes all of their resources in a single configuration language, HCL.

kops can generate Terraform configuration that you then apply with terraform plan and terraform apply. Instead of letting kops make the changes itself, you tell kops what you want, and it writes the corresponding resources to a .tf file for Terraform to create.

Step 1. Setting Up a Remote State

This step is optional, but it’s strongly recommended that you store your Terraform state in S3 with versioning turned on. Add the following settings to your Terraform configuration to configure the S3 backend:

terraform {
  backend "s3" {
    bucket = "my-bucket"
    key    = "path/to/my/key"
    region = "us-east-1"
  }
}

Let’s break down this snippet:

- bucket: replace the placeholder with the name of your S3 bucket.

- key: the file path within the S3 bucket where the Terraform state file should be written.

- region: the AWS region where your bucket lives. Change this to the region of the S3 bucket you created.
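Because kops rewrites kubernetes.tf whenever it regenerates the Terraform output, it can be convenient to keep the backend block in its own file next to it. The helper below is a hypothetical sketch that writes such a backend.tf; the bucket, key, and region are placeholders you pass in.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write backend.tf (S3 backend settings) into the
# directory where kops writes kubernetes.tf, defaulting to the current one.
write_s3_backend() {
  local bucket="$1" key="$2" region="$3" dir="${4:-.}"
  if [ -z "$bucket" ] || [ -z "$key" ] || [ -z "$region" ]; then
    echo "usage: write_s3_backend <bucket> <key> <region> [dir]" >&2
    return 1
  fi
  cat > "${dir}/backend.tf" <<EOF
terraform {
  backend "s3" {
    bucket = "${bucket}"
    key    = "${key}"
    region = "${region}"
  }
}
EOF
}

# Example:
#   write_s3_backend my-terraform-state kops/terraform.tfstate us-east-1
```

Terraform reads every .tf file in the working directory, so terraform init picks up backend.tf alongside kubernetes.tf.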

Step 2. Creating a Cluster

Create the cluster with --target=terraform so that kops writes Terraform files instead of creating the AWS resources itself:

kops create cluster \
  --name=<the name of your cluster> \
  --state=s3://<your bucket name> \
  --dns-zone=<your Route53 hosted zone> \
  [... your other options ...] \
  --out=. \
  --target=terraform

This creates the kops state in the S3 bucket and writes a representation of your configuration to .tf files in the current directory.

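From here, you can review the generated kubernetes.tf and then use Terraform to create all the resources. Remember to keep the kops state as the ultimate source of truth: make changes through kops rather than by editing kubernetes.tf, because kops overwrites that file when it regenerates the configuration.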

Step 3. Initializing Terraform to Set Up the S3 Backend and Provider Plugins

This step requires just one command:

terraform init

It configures the S3 backend and downloads the provider plugins. If you’re using Terraform v0.12.26, you may also see the following warning:

Warning: Provider source not supported in Terraform v0.12

  on kubernetes.tf line 665, in terraform:
 665:   aws = {
 666:     "source"  = "hashicorp/aws"
 667:     "version" = ">= 2.46.0"
 668:   }

A source was declared for provider AWS. Terraform v0.12 does not support the provider source attribute, so it’ll be ignored. There’s nothing to worry about.

Step 4. Reviewing and Creating the Cloud Infrastructure and Kubernetes Cluster

This step requires only two commands:

terraform plan

terraform apply

Wait for the cluster to initialize, and congratulations! You have successfully created a working Kubernetes cluster.

With Terraform, you can also make changes to your infrastructure later. Start from the kops configuration rather than the Terraform files:

kops edit cluster \
  --name=<the name of your cluster> \
  --state=s3://<the name of your S3 bucket>

After the editor opens, make your changes, then run the update command with the --target and --out parameters, like so:

kops update cluster \
  --name=<the name of your cluster> \
  --state=s3://<the name of your bucket> \
  --out=. \
  --target=terraform

Preview your changes, then apply them using the following commands:

terraform plan

terraform apply

Note that kops edit isn’t limited to the cluster spec: kops edit instancegroup lets you change instance groups as well. However, some changes only reach existing nodes after a rolling update with the kops rolling-update cluster command. Running it without --yes is safe, because it only reports which nodes need to be updated.
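The edit, update, plan, and apply cycle above can be scripted. In this hypothetical sketch, plan_kops_changes regenerates the Terraform files and saves the plan with terraform plan -out, and apply_kops_plan applies exactly that saved plan before previewing any rolling update. Splitting the two steps leaves room to read the plan in between; with DRY_RUN=1 both only print their commands.

```shell
#!/usr/bin/env bash
# Sketch of the update cycle after `kops edit cluster`.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

plan_kops_changes() {
  local name="$1" state="$2"
  if [ -z "$name" ] || [ -z "$state" ]; then
    echo "usage: plan_kops_changes <cluster-name> <s3://bucket>" >&2
    return 1
  fi
  run kops update cluster --name="$name" --state="$state" \
    --out=. --target=terraform || return
  # Save the plan so the apply step runs exactly what you reviewed.
  run terraform plan -out=kops.tfplan
}

apply_kops_plan() {
  local name="$1" state="$2"
  if [ -z "$name" ] || [ -z "$state" ]; then
    echo "usage: apply_kops_plan <cluster-name> <s3://bucket>" >&2
    return 1
  fi
  run terraform apply kops.tfplan || return
  # Without --yes, rolling-update only reports which nodes need replacing.
  run kops rolling-update cluster --name="$name" --state="$state"
}
```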

Conclusion

kops gives you a flexible cluster setup that fits well with version control. It can also generate Terraform configuration files for your resources instead of creating them directly, a handy feature if you already use Terraform.

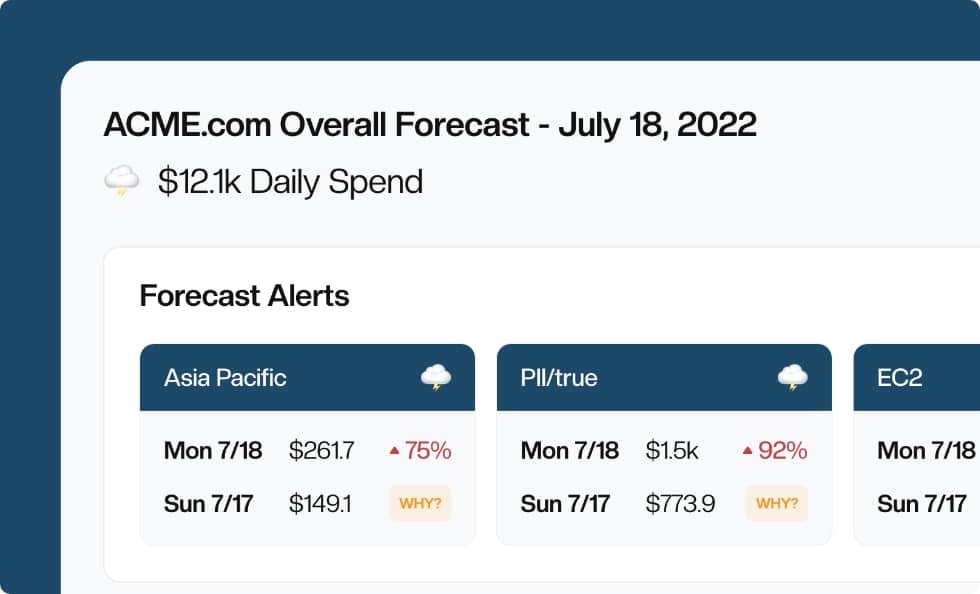

Want to better understand and control your Kubernetes costs? Check out Barometer by CloudForecast to stay efficient and cost-effective.
