Analyzing The Cost Of Your AWS Serverless Functions Using Faast.js

What is faast.js?

Faast.js is an open source project that streamlines invoking serverless functions like AWS Lambda. It allows you to invoke your serverless functions as if they were regular functions in your day-to-day code. But the benefits don’t stop there. It also spins up your serverless infrastructure at the moment the function is actually invoked. No more upfront provisioning of your serverless environments.

This is an interesting take on Infrastructure as Code. With Faast.js we are no longer defining our infrastructure inside of a language like HCL or YAML. Instead, this is more akin to Pulumi, where our infrastructure lives in the code we actually use in our services. The big difference is that our infrastructure is provisioned when our function is called.

But wait, if my infrastructure is allocated on demand for my serverless pipeline, how will I know what it costs to run it?

Faast.js has you covered there as well. You can estimate your costs in real time using the cost snapshot functionality. If you need a deeper look you can use the cost analyzer to estimate the cost of many configurations in parallel.

In this post, we are going to explore how we can use faast.js to provision a serverless function in AWS Lambda. We are going to create a simple serverless function and invoke it using faast.js to see how our workload is dynamically created and destroyed. We will also dive into some of the slick features like cost analysis.

Our serverless function using faast.js

To get started we first need to have the AWS CLI (Command Line Interface) configured. faast.js relies on this setup to work with our AWS account: once the CLI is installed and configured with the correct access keys, our faast setup can use AWS Lambda as its environment.

Once we are all configured to use AWS as our cloud provider we can get started with faast by installing the library into our project.

npm install faastjs

Next, let’s create our serverless function implementation inside of a file named functions.js. Our function is going to be very simple for this blog post. We want to focus on the benefits faast provides but we need a realistic serverless function to do that.

An important thing to remember when using Faast.js is that our serverless function must be idempotent. This means that it takes an input and produces the same output every time it is invoked with that input. This is because the abstraction faast provides leaves the door open to functions being retried.

For our purpose let’s create a simple function that takes an array of numbers and multiplies them, returning the result. This is a naive example but it will allow us to demonstrate how we can use faast to scale out our invocations as well as estimate the cost of our function. It’s also a basic example of idempotency: the same input array will always result in the same product.

Let’s dive into what the code looks like for our serverless function.

    exports.multiply = function(numbers) {
        return numbers.reduce((currTotal, num) => currTotal * num);
    }

Pretty straightforward right? We have a one-line function that takes an array of numbers and returns the final product of them all.
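Before paying for any Lambda invocations, it can help to exercise the function locally. The sketch below inlines the same logic so it runs on its own (in the project you would require it from functions.js) and checks that repeated calls with the same input return the same product. The `sample` array is just an illustrative value.

```javascript
// Same logic as functions.js, inlined so this snippet runs standalone.
function multiply(numbers) {
  return numbers.reduce((currTotal, num) => currTotal * num);
}

const sample = [1, 2, 3, 4, 5, 6]; // illustrative input
const first = multiply(sample);
const second = multiply(sample);

console.log(first);            // 720
console.log(first === second); // true
```

One caveat worth knowing: because reduce is called without an initial value, passing an empty array throws a TypeError. The arrays generated in this post always contain at least one number, so that case never comes up here.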

Now that we have our basic serverless function, let’s incorporate faast.js into our setup. Inside of our index.js file we are going to start off by creating some random number arrays. We can then use those arrays to invoke our serverless function many times in parallel.

    const { faast } = require("faastjs");
    const funcs = require("./functions");

    async function main() {
        const testArrays = [];
        // Build 1001 arrays, each holding the numbers 1..n for a random n from 1 to 10.
        for(let i = 0; i <= 1000; i++) {
            var randomLength = Math.floor((Math.random() * 10) + 1);
            var arr = [];
            for(let k = 1; k <= randomLength; k++) {
                arr.push(k);
            }
            testArrays.push(arr);
        }

        console.log("Invoking serverless functions");
        await invokeFunctions(testArrays);
        console.log("Done invoking serverless functions");
    }

    // Kick off the script; invokeFunctions is defined further down in the same file.
    main().catch(console.error);

Here we are generating 1001 random length arrays (the loop runs from 0 through 1000 inclusive) and then passing them to our invokeFunctions function. It is that function that makes use of faast to invoke our multiplication serverless function in parallel.

    async function invokeFunctions(arrays) {
        const invoker = await faast("aws", funcs);
        const promises = []
        for(let i = 0; i < arrays.length; i++) {
            promises.push(invoker.functions.multiply(arrays[i]))
        }

        const results = await Promise.all(promises);
        await invoker.cleanup();
        console.log("Invocation results");
        console.log(results);
    }

Our invokeFunctions method creates our faast invoker. It then invokes our multiply function for each test array we passed into it. Our function invocation returns a promise that is added to a promises array where we can await on all our invocations. Once all our serverless functions complete we call the cleanup method on our invoker to destroy the infrastructure that was created.
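One thing the function above does not guard against is a rejected invocation: if Promise.all throws, the cleanup call is never reached. A minimal sketch of one way to handle that is to wrap the work in try/finally. The helper name `withInvoker` and its `createInvoker` parameter are hypothetical and not part of faast.js; in this post's setup you would pass something like `() => faast("aws", funcs)`. The stand-in invoker exists only so the snippet runs without AWS credentials.

```javascript
// Hypothetical helper: run `work` against an invoker and always clean up.
// `createInvoker` is any async factory, e.g. () => faast("aws", funcs).
async function withInvoker(createInvoker, work) {
  const invoker = await createInvoker();
  try {
    return await work(invoker);
  } finally {
    // Runs whether `work` resolved or threw.
    await invoker.cleanup();
  }
}

// Stand-in invoker exposing only the two members this post uses
// (functions.multiply and cleanup), so the sketch runs locally.
function createFakeInvoker() {
  return {
    cleanedUp: false,
    functions: {
      multiply: async (numbers) => numbers.reduce((total, num) => total * num),
    },
    async cleanup() {
      this.cleanedUp = true;
    },
  };
}

async function demo() {
  const results = await withInvoker(
    async () => createFakeInvoker(),
    (invoker) =>
      Promise.all([[1, 2, 3], [1, 2, 3, 4]].map((arr) => invoker.functions.multiply(arr)))
  );
  console.log(results); // [ 6, 24 ]
}

demo();
```

Passing the factory in as a parameter keeps the cleanup logic separate from the choice of provider, which also makes it easy to test without touching a real cloud account.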

Running our serverless function

Now that we have our serverless function and the outer invocation logic that faast will use to invoke it, it’s time to test things out.

This is done with a node call to our entry point script. From the root of the directory where our code lives, run the following commands. Note that .js should be replaced with the name of the file where the faast.js invoker calls your serverless function.

    $ npm install
    $ node src/.js

That’s it! We just invoked our serverless function via the faast.js framework. We should see logs in our output that look something like this.

    $ node src/index.js
    Invoking serverless functions
    Invocation results
    [ 720, 6, 40320, 720, 3628800, 120, 3628800, .....]
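Because each test array holds the integers 1 through n, every product is simply n factorial, which is why the output is full of values like 720 (6!) and 3628800 (10!). The following local sketch, independent of faast.js, reproduces the expected value for each possible array length. `rangeArray` is a hypothetical helper name introduced only for this illustration.

```javascript
// Build [1, 2, ..., n], mirroring the inner loop in main().
function rangeArray(n) {
  return Array.from({ length: n }, (_, i) => i + 1);
}

// Same reduction as the serverless multiply function.
function multiply(numbers) {
  return numbers.reduce((currTotal, num) => currTotal * num);
}

// Expected product for every array length main() can generate (1 to 10).
const expected = {};
for (let n = 1; n <= 10; n++) {
  expected[n] = multiply(rangeArray(n));
}

console.log(expected[3], expected[6], expected[8], expected[10]);
// 6 720 40320 3628800
```

Any value in the real output that is not one of these ten factorials would be a sign that something went wrong in transit.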

Pretty cool right? We were able to write our serverless function in its own module and then invoke it as if it was any old function from our code using faast.js. There was no upfront provisioning of our AWS infrastructure. No need to handle retries or errors, and everything was cleaned up for us.

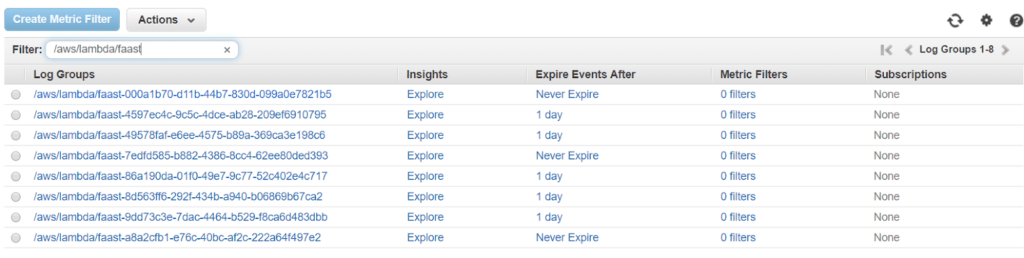

We can see this for ourselves by checking out the AWS CloudWatch log groups that were created for each of our functions. You can view these logs by going to CloudWatch Logs in your AWS account and then filtering for the prefix /aws/lambda/faast.

This is an exciting take on infrastructure as code. It removes the need to provision infrastructure ahead of time. We don’t have to configure these AWS Lambda functions in advance; they are created dynamically when our faast module is invoked. That alone is very exciting because it allows developers to invoke serverless workloads as if they were functions in our everyday code.

But it gets even better.

How much did our invocations cost?

With great power comes the risk of doing things very wrong. Or put in terms of AWS, getting a high bill at the end of the month because you got some configuration wrong.

It turns out faast can help us out with that as well through its built-in cost snapshot. Let’s update our logic to make use of it so we can see a breakdown of what our invocations are costing us.

All we need to do is invoke a function called costSnapshot on our faast invoker. So we add that below to see a full breakdown of what our serverless invocations are costing us. Here is the updated code that handles this.

    async function invokeFunctions(arrays) {
        const invoker = await faast("aws", funcs);
        const promises = []
        for(let i = 0; i < arrays.length; i++) {
            promises.push(invoker.functions.multiply(arrays[i]))
        }

        const results = await Promise.all(promises);
        await invoker.cleanup();
        console.log(results);

        const costSnapshot = await invoker.costSnapshot();
        console.log(costSnapshot.toString());
    }

So what does our current serverless pipeline cost us? Here is the log output from the call to costSnapshot.

    functionCallDuration  $0.00002813/second          100.1 seconds    $0.00281588    91.9%  [1]
    functionCallRequests  $0.00000020/request          1001 requests   $0.00020020     6.5%  [2]
    outboundDataTransfer  $0.09000000/GB         0.00052891 GB         $0.00004760     1.6%  [3]
    sqs                   $0.00000040/request             0 request    $0              0.0%  [4]
    sns                   $0.00000050/request             0 request    $0              0.0%  [5]
    logIngestion          $0.50000000/GB                  0 GB         $0              0.0%  [6]
    ---------------------------------------------------------------------------------------
                                                                       $0.00306368 (USD)

      * Estimated using highest pricing tier for each service. Limitations apply.
     ** Does not account for free tier.
    [1]: https://aws.amazon.com/lambda/pricing (rate = 0.00001667/(GB*second) * 1.6875 GB = 0.00002813/second)
    [2]: https://aws.amazon.com/lambda/pricing
    [3]: https://aws.amazon.com/ec2/pricing/on-demand/#Data_Transfer
    [4]: https://aws.amazon.com/sqs/pricing
    [5]: https://aws.amazon.com/sns/pricing
    [6]: https://aws.amazon.com/cloudwatch/pricing/ - Log ingestion costs not currently included.

Here we see that we had 1001 function requests with a total duration of about 100 seconds and a small amount of outbound data transfer. All of this for a total of roughly $0.003, or about three-tenths of a cent.
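The arithmetic behind the snapshot is easy to check by hand. As a small sketch, the snippet below recomputes each non-zero line item from the rates and quantities printed in the log output (rate times quantity) and sums them. The `lineItems` structure is just an illustrative way to hold the numbers, not something faast.js exposes.

```javascript
// Rates and quantities copied from the cost snapshot log output.
const lineItems = [
  // Duration rate = price per GB-second * memory size in GB (footnote [1]).
  { name: "functionCallDuration", rate: 0.00001667 * 1.6875, quantity: 100.1 },
  { name: "functionCallRequests", rate: 0.0000002, quantity: 1001 },
  { name: "outboundDataTransfer", rate: 0.09, quantity: 0.00052891 },
];

// Cost of each line item is simply rate * quantity.
let total = 0;
for (const item of lineItems) {
  item.cost = item.rate * item.quantity;
  total += item.cost;
}

// Print each cost with its share of the total, then the total itself.
for (const item of lineItems) {
  const share = ((item.cost / total) * 100).toFixed(1);
  console.log(`${item.name}: $${item.cost.toFixed(8)} (${share}%)`);
}
console.log(`total: $${total.toFixed(8)}`);
```

Run locally, this reproduces the $0.00306368 total and the 91.9% / 6.5% / 1.6% split, which makes it clear that execution time, not request count, dominates the bill for this workload.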

Putting it all together

What we have demonstrated is that we can build a serverless function that requires no upfront infrastructure. Our multiply function is provisioned on the fly via faast. We can even dump cost snapshots from faast to see what our invocations are costing us as a whole and on a per request basis.

What this allows us as developers to do is to abstract away the serverless world but still gain all the advantages of it.

Imagine if our invoker wrapper wasn’t a script that we run from the command line but rather another function that is invoked in an API that we are building. The developer of the API needs to only know how to invoke our function in JavaScript. All the serverless knowledge and infrastructure is completely abstracted from them. To their code, it’s nothing more than another function.

This is a great abstraction layer for folks that are new to the serverless world. It gives you all the advantages of it without climbing some of the learning curve.

But, it does come with a cost. Done wrong our serverless costs could go through the roof. If the API developer invokes our function in a while loop without understanding the ramifications of that, our AWS bill at the end of the month could make us cry.
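To get a feel for how quickly that can add up, the rates from the snapshot can be extrapolated. This is only a rough sketch: it assumes the per-request and per-GB-second rates shown earlier in this post, the same 1.6875 GB memory size, and an average duration of about 0.1 seconds per call (100.1 seconds over 1001 requests). It ignores data transfer, log ingestion, and the free tier. The function name `estimateLambdaCost` is hypothetical.

```javascript
// Rates taken from the cost snapshot earlier in this post (USD).
const PRICE_PER_GB_SECOND = 0.00001667;
const PRICE_PER_REQUEST = 0.0000002;

// Hypothetical helper: rough compute + request cost, ignoring data
// transfer, log ingestion, and the free tier.
function estimateLambdaCost({ requests, avgSeconds, memoryGB }) {
  const durationCost = requests * avgSeconds * memoryGB * PRICE_PER_GB_SECOND;
  const requestCost = requests * PRICE_PER_REQUEST;
  return durationCost + requestCost;
}

// The run in this post: 1001 requests averaging about 0.1 s at 1.6875 GB.
const thisRun = estimateLambdaCost({ requests: 1001, avgSeconds: 0.1, memoryGB: 1.6875 });

// The same function called 100 million times, e.g. from a runaway loop.
const runaway = estimateLambdaCost({ requests: 100_000_000, avgSeconds: 0.1, memoryGB: 1.6875 });

console.log(thisRun.toFixed(6)); // 0.003016
console.log(runaway.toFixed(2)); // 301.31
```

A fraction of a cent per thousand calls looks harmless, but under these assumptions the same function invoked a hundred million times lands at around three hundred dollars.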

Conclusion

Faast.js is a very cool idea from a serverless and infrastructure as code perspective. The best code is the code you never have to write. Faast gives us that by provisioning our infrastructure for us when we need it. It also allows us to treat our serverless workloads as just another function in our code.

It does come with a cost and some hiccups that might not fit all use cases. For example the role that is created for the AWS Lambda functions has Administrator access and there is no way to configure that. Not a security best practice. There is also the case where other resources can be left lying around in your account if the cleanup method is not called.

These are things that I am sure the project is looking to address. In the meantime I would suggest trying out Faast in a development/test context to gain an understanding of what your serverless workloads are going to cost you at scale.

If you have any questions about Faast.js or serverless in general feel free to ping me via Twitter @kylegalbraith. Also check out my weekly Learn by Doing newsletter or my Learn AWS By Using It course to learn even more about the cloud, coding, and DevOps.

If you have questions about CloudForecast to help you monitor and optimize your AWS cost, feel free to ping Tony: [email protected]

Want to try CloudForecast? Sign up today and get started with a risk-free 30 day free trial. No credit card required.

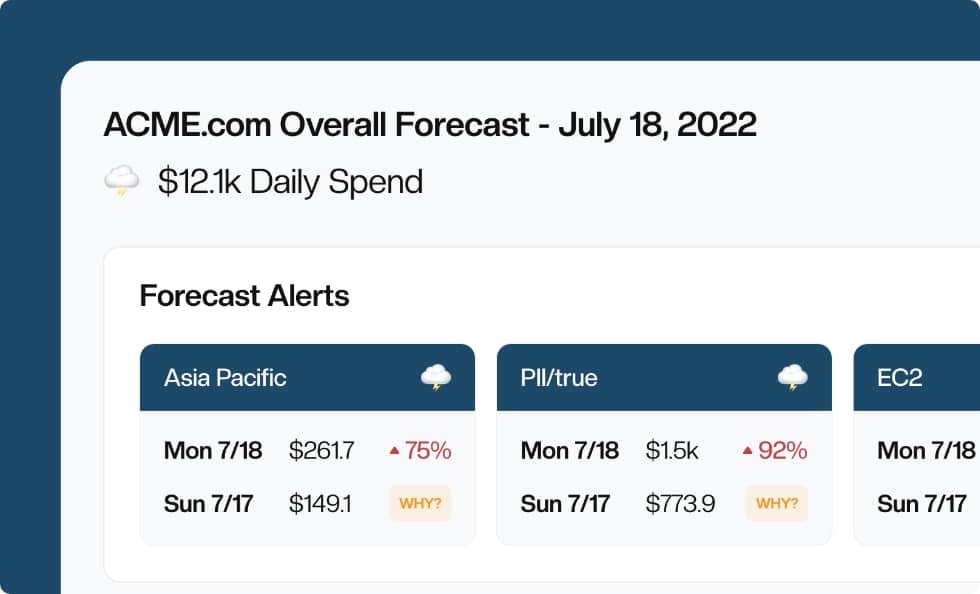

Manage, track, and report your AWS spending in seconds — not hours

CloudForecast’s focused daily AWS cost monitoring reports to help busy engineering teams understand their AWS costs, rapidly respond to any overspends, and promote opportunities to save costs.

Monitor & Manage AWS Cost in Seconds — Not Hours

CloudForecast makes the tedious work of AWS cost monitoring less tedious.

AWS cost management is easy with CloudForecast

We would love to learn more about the problems you are facing around AWS cost. Connect with us directly and we’ll schedule a time to chat!